I see. Makes sense.

Avid Amoeba

- 9 Posts

- 384 Comments

4·21 days ago

Sounds a bit like the drug dealer’s business model.

7·21 days ago

I think federated non-profit video platforms won’t work at large scale without P2P.

8·21 days ago

Not noticeable with always-on Tailscale in the default split-tunnel mode, that is, when Tailscale is only used to reach Tailscale machines and everything else is routed via the default route.
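For anyone curious what split-tunnel means in practice, here's a rough sketch of the routing decision. It assumes the tailnet uses Tailscale's default 100.64.0.0/10 IPv4 range and ignores subnet routers, exit nodes and IPv6. `route_for` is a made-up helper; the real decision is made by the OS routing table, not by code like this.

```python
import ipaddress

# Assumption: tailnet peers use Tailscale's default IPv4 range (100.64.0.0/10).
# Subnet routers, exit nodes and IPv6 are deliberately not modelled.
TAILNET_V4 = ipaddress.ip_network("100.64.0.0/10")


def route_for(destination: str) -> str:
    """Hypothetical helper: which path a split-tunnel setup would use."""
    addr = ipaddress.ip_address(destination)
    if addr in TAILNET_V4:
        return "tailscale0"  # tailnet machines go through the tunnel
    return "default"  # everything else uses the normal default route


if __name__ == "__main__":
    print(route_for("100.101.102.103"))  # tailscale0
    print(route_for("1.1.1.1"))          # default
```

Since only tailnet-bound packets take the tunnel, regular browsing and streaming never touch it, which is why the overhead isn't noticeable.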

The distance and the budget call for 75-85". I’ve had good luck with an entry-level Sony. I bought an X85K 85" two years ago. It’s one step above the cheapest model; the cheapest model used an IPS panel which performed a bit shittier, though I don’t recall in what regard. The X85K uses a Samsung panel with a standard, no-nonsense edge-lit LED backlight, which is very robust over time. The original unit was a lemon: it developed a line across the screen, and I got it replaced under warranty. The second unit has been flawless so far. Software-wise, it’s a bog-standard Android TV/Google TV and doesn’t require an Internet connection to set up. That said, I use a Chromecast with Google TV (CCwGTV) with it and I don’t hear a peep from the TV OS about anything. The only thing I didn’t like about it was the price at the time. I bought it straight from Sony, who ship from the GTA; it was also available from other retailers. I think this model might actually still be current: I still see it on Sony’s website, along with another one, the X85J.

Before that I had an entry-level Samsung 75". It was fine too and didn’t require Internet. The panel was a similar affair, slightly worse in terms of blacks and uniformity, but I’m sure that wasn’t due to its Samsungness; it was simply a cheaper panel than what Sony uses.

2·30 days ago

> cross-device syncing between desktop and phone

This is me

The VPN should keep access to the homelab working even when the external IP changes, assuming the VPN connection is initiated from the homelab to the cloud. The reverse proxy would then use the VPN-local IPs to connect to services.
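A rough sketch of the reverse-proxy side, assuming a hypothetical 10.8.0.0/24 VPN subnet, made-up hostnames and ports, and a homelab that dials out to the cloud box. The point is that the upstreams are VPN-internal IPs, so nothing here changes when the home ISP hands out a new external IP.

```python
import ipaddress

# Hypothetical VPN subnet shared by the cloud VPS and the homelab.
VPN_SUBNET = ipaddress.ip_network("10.8.0.0/24")

# Hypothetical map: public hostname -> (homelab VPN IP, service port).
# These addresses don't depend on the homelab's external IP, because the
# homelab dials out to the VPS and keeps its VPN-internal address.
UPSTREAMS = {
    "photos.example.com": ("10.8.0.2", 2342),
    "media.example.com": ("10.8.0.2", 8096),
}


def upstream_for(host: str) -> str:
    """Return the URL the reverse proxy would forward this host to."""
    ip, port = UPSTREAMS[host]  # KeyError for hosts we don't proxy
    if ipaddress.ip_address(ip) not in VPN_SUBNET:
        raise ValueError(f"{host} points outside the VPN subnet: {ip}")
    return f"http://{ip}:{port}"


if __name__ == "__main__":
    print(upstream_for("photos.example.com"))  # http://10.8.0.2:2342
```

In a real setup this mapping would live in the proxy's own config (Caddy, nginx, etc.); the sketch just shows which addresses it would point at.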

3·1 month ago

If you’re switching low-power, inconsequential things like LED lights, they’re OK.

9·1 month ago

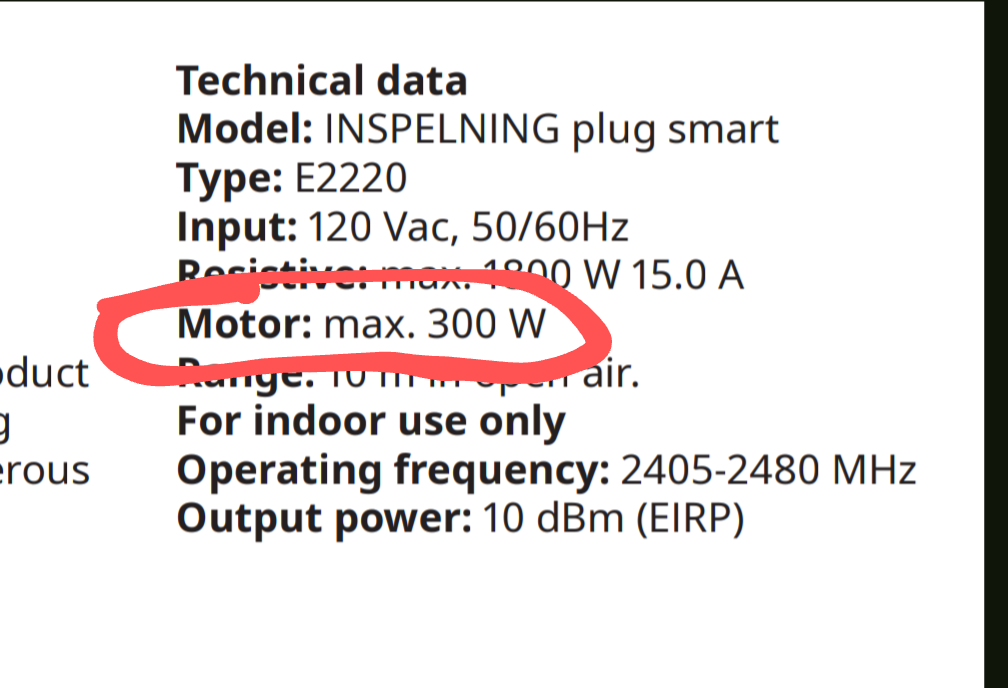

9·1 month agoThis like most plugs in this format is not for inductive loads so it can only handle 300W with such:

It might be OK if the AC units are small enough.
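A back-of-the-envelope check, assuming the 300 W inductive rating above and the volts/amps from the AC unit's nameplate. Volts × amps gives apparent power (VA), which for a motor is never less than its real power in watts, so the check errs on the safe side. It does ignore the compressor's start-up inrush current, which is well above the running current. The helper name is hypothetical.

```python
# Assumption: the 300 W figure is the plug's inductive-load rating quoted above.
INDUCTIVE_RATING_W = 300


def fits_inductive_rating(volts: float, running_amps: float,
                          rating_w: float = INDUCTIVE_RATING_W) -> bool:
    """Rough check using nameplate volts and running amps.

    volts * amps is apparent power (VA), which is never less than the
    real power in watts, so this errs on the safe side. It ignores the
    compressor's start-up inrush current, which is well above this.
    """
    return volts * running_amps <= rating_w


if __name__ == "__main__":
    print(fits_inductive_rating(120, 2.0))  # True  (240 VA)
    print(fits_inductive_rating(120, 5.0))  # False (600 VA)
```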

5·1 month ago

If you’re gonna be switching AC units, you likely want a plug that can switch inductive loads. Most can’t. Well, they can, but their relays crap out quickly. Here’s an example of a unit rated for inductive loads. It’s for North America and uses Z-Wave, so it’s not what you’re looking for, but they explicitly call out that it can be used for AC motors. Some units explicitly say they can’t be used for inductive loads, but many don’t, and you learn the hard way.

Thanks! I’ve been running 5× 16 TB drives from SPD for over 6 months with zero issues.

23·1 month ago

And they package drives correctly.

8·1 month ago

I don’t know if SPD ships to where you are, but a manufacturer-recertified 16 TB drive from them goes for ~$160. I have 7 drives from them so far, 5 in continuous use since spring, with no issues.

It might also save it from shit controllers and cables, which ECC can’t help with (it has for me).
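To illustrate why end-to-end checksums catch what ECC can't: ECC only protects data while it's in RAM, whereas a checksum recorded at write time and verified at read time also catches corruption introduced by a flaky controller or cable. This is a toy model, not ZFS code. ZFS keeps each block's checksum in its parent block pointer and defaults to fletcher4; the sketch uses SHA-256 from `hashlib` and simulates the bad cable by flipping one bit.

```python
import hashlib

# Toy model only. ZFS keeps each block's checksum in its parent block
# pointer (fletcher4 by default); SHA-256 is used here because hashlib has it.


def write_block(data: bytes) -> tuple[bytes, bytes]:
    """Return the block plus the checksum recorded at write time."""
    return data, hashlib.sha256(data).digest()


def read_block(data: bytes, expected: bytes) -> bytes:
    """Verify on read and refuse to hand back silently corrupted data."""
    if hashlib.sha256(data).digest() != expected:
        raise OSError("checksum mismatch: corrupted between RAM and disk")
    return data


def flip_bit(data: bytes, bit: int) -> bytes:
    """Simulate a flaky controller or cable flipping a single bit."""
    buf = bytearray(data)
    buf[bit // 8] ^= 1 << (bit % 8)
    return bytes(buf)


if __name__ == "__main__":
    block, checksum = write_block(b"family photos" * 100)
    read_block(block, checksum)  # passes
    try:
        read_block(flip_bit(block, 1234), checksum)
    except OSError as err:
        print(err)  # checksum mismatch: corrupted between RAM and disk
```

On a pool with redundancy (mirror or RAIDZ), ZFS goes one step further than this sketch and repairs the bad block from a good copy instead of just raising an error.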

> Unless you need RAID 5/6, which doesn’t work well on btrfs

Yes, because they’re already using some sort of parity RAID, so I assume they’d want parity RAID in ZFS/Btrfs, and as you said, that’s not an option for Btrfs. So LVMRAID + Btrfs is the alternative. LVMRAID because it’s simpler to use than mdraid + LVM, and the implementation is still mdraid under the covers.
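For readers less familiar with parity RAID, here's a minimal sketch of the idea behind RAID 5-style single parity: the parity chunk is the XOR of the data chunks, so any one lost chunk can be rebuilt from the survivors. It's a generic illustration only, not how LVMRAID/mdraid implement it internally. Those also rotate parity across disks, and RAID 6 adds a second, non-XOR syndrome.

```python
from functools import reduce

# Generic RAID 5-style single parity, not LVM's or md's actual code
# (those also rotate parity across disks; RAID 6 adds a second syndrome).


def xor_chunks(chunks: list[bytes]) -> bytes:
    """XOR equal-length chunks together byte by byte."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*chunks))


def make_parity(data_chunks: list[bytes]) -> bytes:
    """The parity chunk is simply the XOR of all data chunks in the stripe."""
    return xor_chunks(data_chunks)


def rebuild(surviving: list[bytes]) -> bytes:
    """Any single lost chunk (data or parity) is the XOR of all survivors."""
    return xor_chunks(surviving)


if __name__ == "__main__":
    stripe = [b"disk", b"one!", b"two?"]  # three data chunks of 4 bytes
    parity = make_parity(stripe)
    # Pretend the second disk died and rebuild its chunk.
    print(rebuild([stripe[0], stripe[2], parity]))  # b'one!'
```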

> And you probably know that sync writes will shred NAND while async writes are not that bad.

This doesn’t make sense. SSD controllers have been able to handle any write amplification under any load since SandForce 2.

Also, most of the argument around speed doesn’t make sense, other than DC-grade SSDs being expected to be faster under sustained random loads. But we know how fast consumer SSDs are: we know their sequential and random performance, including sustained performance under constant load. There are plenty of benchmarks out there for most popular models, and the drives will be about as fast as those benchmarks on average. If that’s enough for the person’s use case, it’s enough. And they’ll handle as many TB of writes as advertised, and the amount written can be monitored through SMART.
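Putting the endurance point into numbers: a quick sketch that turns an advertised TBW rating and a steady daily write volume into years of rated life. Both inputs in the example (600 TBW, 100 GB/day) are hypothetical, and it uses decimal TB like drive spec sheets do.

```python
def years_of_endurance(tbw_rating_tb: float, daily_writes_gb: float) -> float:
    """Years until the advertised TBW is used up at a steady daily write rate."""
    tb_per_year = daily_writes_gb * 365 / 1000  # decimal GB -> TB, like spec sheets
    return tbw_rating_tb / tb_per_year


if __name__ == "__main__":
    # Hypothetical drive: 600 TBW rating, 100 GB written per day.
    print(round(years_of_endurance(600, 100), 1))  # 16.4
```

100 GB/day works out to 36.5 TB/year, so a 600 TBW drive has roughly 16 years of rated endurance at that pace.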

And why would ZFS be any different from any other similar FS/storage system with regard to random writes? I’m not aware of ZFS generating more IO than needed. If it did, it would show up as lower performance compared to similar systems, when in fact ZFS is often faster. I think SSD performance characteristics are independent of ZFS.

Also OP is talking about HDDs, so not even sure where the ZFS on SSDs discussion is coming from.

Not sure where you’re getting that. I’ve been running ZFS for 5 years now on bottom-of-the-barrel consumer drives: shucked drives and old drives. I have used 7 shucked drives total. One died during a physical move; the remaining 6 are still in use in my primary server. Oh, and the speed is superb. The current RAIDZ2, composed of the 6 shucked drives and 2 IronWolfs, does 1.3 GB/s sequential reads and thousands of 4K write IOPS. And this is all happening over USB, in 2× 4-bay USB DAS enclosures.
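Rough capacity math for a pool like that: a RAIDZ vdev gives about (drives - parity) × the smallest drive's size. This sketch ignores metadata, allocation padding and TB vs TiB, so real usable space comes out lower. The 8 TB drive size in the example is hypothetical, since the actual drive sizes aren't stated.

```python
def raidz_usable_tb(smallest_drive_tb: float, drives: int, parity: int = 2) -> float:
    """Approximate usable space of one RAIDZ vdev (parity=2 means RAIDZ2).

    Every member counts as the smallest drive's size. Metadata, allocation
    padding and TB vs TiB are ignored, so real usable space is lower.
    """
    if parity not in (1, 2, 3):
        raise ValueError("RAIDZ supports 1, 2 or 3 parity drives")
    if drives <= parity:
        raise ValueError("a RAIDZ vdev needs more drives than parity")
    return (drives - parity) * smallest_drive_tb


if __name__ == "__main__":
    # Hypothetical: 8 drives, smallest one 8 TB, RAIDZ2.
    print(raidz_usable_tb(8, 8))  # 48
```

With RAIDZ2, any two of the eight drives can fail without data loss, which is a big part of why cheap shucked drives are fine here.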

That doesn’t sound right. Also, random writes don’t kill SSDs; total writes do, and you can see how much has been written to an SSD in its SMART values. I’ve used SSDs for swap for years without any of them breaking, and it was heavily used swap, for running VMs and software builds. Their total-bytes-written counters increased steadily but haven’t reached the limit, and the drives haven’t died despite the sustained random-write load. One was an Intel MacBook’s onboard SSD, another a random Toshiba OEM NVMe, and another a Samsung OEM NVMe.
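For NVMe drives, the relevant SMART value is Data Units Written (as shown by `smartctl -a`), which the NVMe spec reports in units of 1000 × 512 bytes. Here's a small sketch converting that to TB and to a percentage of the rated TBW. The function names and example numbers are hypothetical, and SATA SSDs expose this through vendor-specific attributes with different units.

```python
# NVMe reports Data Units Written in thousands of 512-byte units,
# so one data unit is 512,000 bytes.
BYTES_PER_DATA_UNIT = 512 * 1000


def nvme_tb_written(data_units_written: int) -> float:
    """Convert the raw Data Units Written counter to decimal terabytes."""
    return data_units_written * BYTES_PER_DATA_UNIT / 1e12


def tbw_used_percent(data_units_written: int, tbw_rating_tb: float) -> float:
    """Share of the advertised TBW endurance already consumed."""
    return 100 * nvme_tb_written(data_units_written) / tbw_rating_tb


if __name__ == "__main__":
    units = 20_000_000  # hypothetical counter value
    print(nvme_tb_written(units))                    # 10.24
    print(round(tbw_used_percent(units, 600), 2))    # 1.71
```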

It’s a trap.

- A message from Canada