With free ESXi over (not shocking, but a bit sad), I am now about to move away from a virtualisation platform I’ve used for a quarter of a century.

Never having really tried the alternatives, is there anything out there that looks and feels like ESXi?

I don’t host anything exceptional and I don’t need production quality for myself, but in all seriousness what we run at home ends up at work at some point, so there’s that aspect too.

Thanks for your input!

- KVM/QEMU/libvirt/virt-manager on Debian 12 for a minimal installation that lets you choose backup tools and the like on your own.

- Proxmox for a mature KVM-based virtualizer with built in tools for backups, clustering, etcetera. Also supports LXC. https://github.com/proxmox

- Incus for LXC/KVM virtualization - younger solution than Proxmox and more focused on LXC. https://github.com/lxc/incus

/thread

This is my go-to setup.

I try to stick with libvirt/`virsh` when I don’t need any graphical interface (integrates beautifully with ansible [1]), or when I don’t need clustering/HA (libvirt does support “clustering” at least in some capability, you can live migrate VMs between hosts, manage remote hypervisors from virsh/virt-manager, etc). On development/lab desktops I bolt virt-manager on top so I have the exact same setup as my production setup, with a nice added GUI. I heard that cockpit could be used as a web interface but have never tried it.

Proxmox on more complex setups (I try to manage it using ansible/the API as much as possible, but the web UI is a nice touch for one-shot operations).

Re incus: I don’t know for sure yet. I have an old LXD setup at work that I’d like to migrate to something else, but I figured that since both libvirt and proxmox support management of LXC containers, I might as well consolidate and use one of these instead.

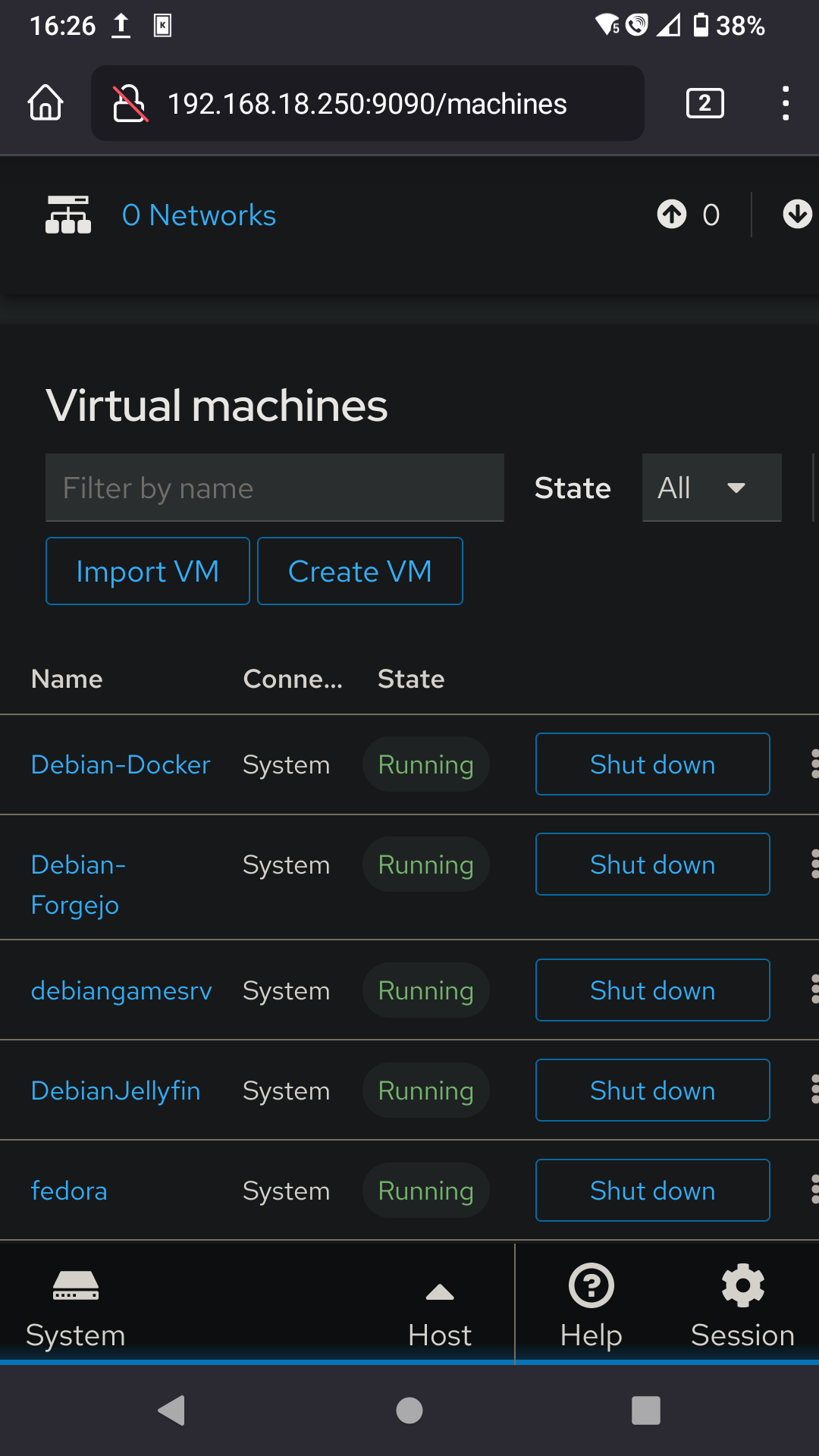

I use Cockpit on my phone to start my virtual Fedora, which has PCIe passthrough for the GPU and a USB controller.

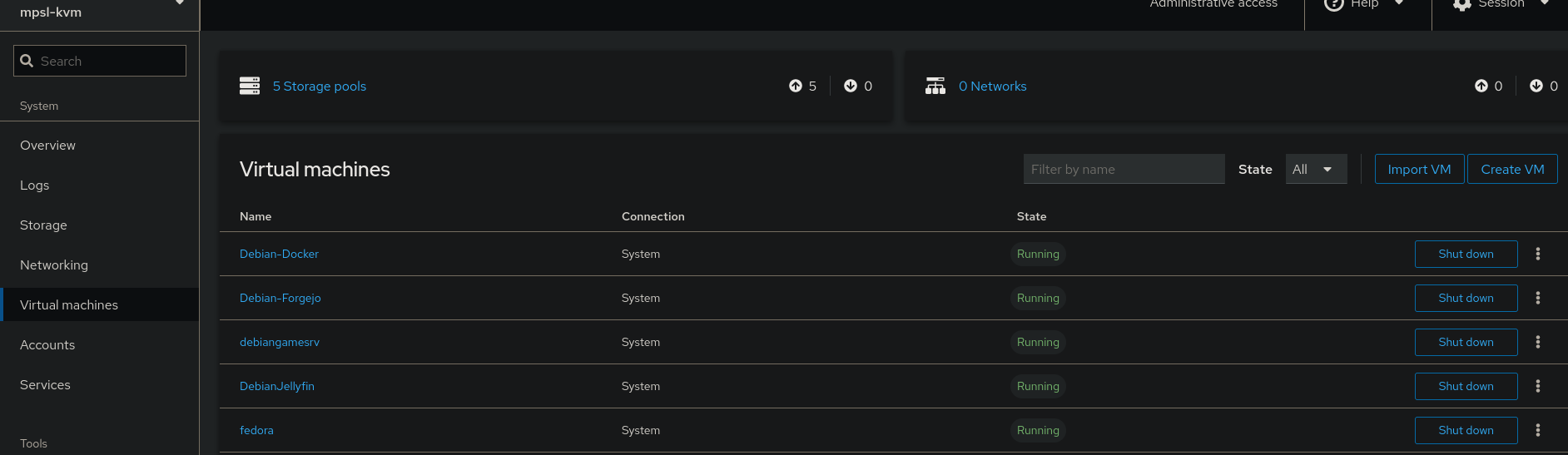

We use cockpit at work. It’s OK, but it definitely feels limited compared to Proxmox or Xen Orchestra.

Red Hat’s focus is really on OpenStack, but that’s more of a cloud virtualization platform, so not all that well suited for home use. It’s a shame because I really like Cockpit as a platform. It just needs a little love in terms of things like the graphical console and editing virtual machine resources.

Ooh, didn’t know libvirt supported clusters and live migrations…

I’ve just set up Proxmox, but as it’s Debian-based and I run Arch everywhere else, maybe I could try that… thanks!

In my experience and for my mostly basic needs, major differences between libvirt and proxmox:

- The “clustering” in libvirt is very limited (no HA, automatic fencing, Ceph integration, etc., at least out of the box). I basically use it to 1. admin multiple libvirt hypervisors from a single libvirt/virt-manager instance and 2. migrate VMs between instances (they need to be using shared storage for disks, etc.), but it covers 90% of my use cases.

- On proxmox hosts I let proxmox manage the firewall, on libvirt hosts I manage it through firewalld like any other server (+ libvirt/qemu hooks for port forwarding).

- On proxmox I use the built-in template feature to provision new VMs from a template, on libvirt I do a mix of `virt-clone` and `virt-sysprep`.

- On libvirt I use `virt-install` and a Debian preseed.cfg to provision new templates, on proxmox I do it… well… manually. But both support cloud-init based provisioning so I might standardize to that in the future (and ditch templates).
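For anyone curious what the `virt-clone` + `virt-sysprep` mix can look like in practice, here is a minimal sketch. It is only an illustration, not the commenter's actual setup: the domain names `debian12-template` and `web01` are hypothetical, the template is assumed to be shut off, and by default the script only prints the commands instead of running them.

```python
from __future__ import annotations

import shlex
import subprocess


def clone_commands(template: str, new_name: str) -> list[list[str]]:
    """Return the commands to clone a shut-off template VM and reset the copy."""
    return [
        # Copy the template's definition and disks; --auto-clone picks
        # new disk paths and MAC addresses automatically.
        ["virt-clone", "--original", template, "--name", new_name, "--auto-clone"],
        # Strip machine-specific state (SSH host keys, logs, machine-id, ...)
        # from the clone and give it its own hostname.
        ["virt-sysprep", "-d", new_name, "--hostname", new_name],
    ]


def provision(template: str, new_name: str, dry_run: bool = True) -> None:
    """Print each command and, unless dry_run is set, execute it."""
    for cmd in clone_commands(template, new_name):
        print(shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Hypothetical names, dry run only.
    provision("debian12-template", "web01")
```

Keeping the command construction separate from execution makes it easy to check what would run before pointing it at a real hypervisor.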

My understanding is that for proper cluster management you slap Pacemaker on there.

LXD/Incus provides a management and automation layer that really makes things work smoothly essentially replacing Proxmox. With Incus you can create clusters, download, manage and create OS images, run backups and restores, bootstrap things with cloud-init, move containers and VMs between servers (even live sometimes) and those are just a few things you can do with it and not with pure KVM/libvirt. Also has a WebUI for those interested.

A big advantage of LXD is the fact that it provides a unified experience to deal with both containers and VMs, no need to learn two different tools / APIs as the same commands and options will be used to manage both. Even profiles defining storage, network resources and other policies can be shared and applied across both containers and VMs.

Incus isn’t about replacing existing virtualization techniques such as QEMU, KVM and libvirt, it is about augmenting them so they become easier to manage at scale and overall more efficient. It plays in the same space as, let’s say, Proxmox, and I can guarantee you that most people running it today will eventually move to Incus and never look back. It works way better, true open-source, no bugs, no holding back critical fixes for paying users and way less overhead.

they need to be using shared storage for disks

You can perform a live migration without shared storage with libvirt

I should RTFM again… https://manpages.debian.org/bookworm/libvirt-clients/virsh.1.en.html has options for `virsh migrate` such as `--copy-storage-all`… Not sure how it would work for actual live migrations but I will definitely check it out. Thanks for the hint

Pretty darn well. I actually needed to do some maintenance on the server earlier today so I just migrated all of the VMs over to my desktop, did the server maintenance, and then moved the VMs back over to the server, all while live and functioning. Running ping in the background, it looks like it missed a handful of pings as the switches figured their life out, and then everything was right back where it was; not even long enough for uptime-kuma to notice.
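A rough sketch of what such a migration call can look like, wrapped in Python so the command can be inspected before it runs. The domain name `fedora-vm` and host `desktop.lan` are hypothetical; the flags (`--live`, `--persistent`, `--verbose`, `--copy-storage-all`) are from the virsh man page linked above. Depending on the libvirt version and storage type, the destination may need matching disk images created beforehand, so treat this as a starting point only.

```python
from __future__ import annotations

import shlex
import subprocess


def migrate_command(domain: str, dest_host: str, copy_storage: bool = True) -> list[str]:
    """Build a `virsh migrate` command line for a live migration over SSH."""
    cmd = ["virsh", "migrate", "--live", "--persistent", "--verbose"]
    if copy_storage:
        # No shared storage between the hosts: copy the full disk
        # images to the destination as part of the migration.
        cmd.append("--copy-storage-all")
    # Destination is the system libvirt daemon on the remote host.
    cmd += [domain, f"qemu+ssh://{dest_host}/system"]
    return cmd


def migrate(domain: str, dest_host: str, dry_run: bool = True) -> None:
    """Print the command and, unless dry_run is set, execute it."""
    cmd = migrate_command(domain, dest_host)
    print(shlex.join(cmd))
    if not dry_run:
        subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Hypothetical names, dry run only.
    migrate("fedora-vm", "desktop.lan")
```

With shared storage, passing `copy_storage=False` to `migrate_command` drops the disk copy and only the VM state moves.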

I didn’t know libvirt supported HA

Did you read? I specifically said it didn’t, at least not out-of-the-box.

libvirt does support “clustering” at least in some capability, you can live migrate VMs between hosts

clustering != HA

The “clustering” in libvirt is limited to remote controlling multiple nodes, and migrating guests between them. To get the High Availability part you need to set it up through other means, e.g. pacemaker and a bunch of scripts.

Re incus: I don’t know for sure yet. I have an old LXD setup at work that I’d like to migrate to something else, but I figured that since both libvirt and proxmox support management of LXC containers, I might as well consolidate and use one of these instead.

Maybe you should consider consolidating into Incus. You’re already running on LXC containers, so why keep dragging along all the Proxmox bloat and potential issues when you can use LXD/Incus, made by the same people who made LXC, which is WAY faster, more stable, more integrated and free?

Hey look, it’s the Incus guy. Every time this topic comes up, you chime in and roast Proxmox and its potential issues, with a link to a previous comment roasting Proxmox and its potential issues, and at no point do you go into what those potential issues are beyond the broad catch-all term ‘bloat’.

I respect your data center experience, but I wish you were more forward with your issues instead of broad, generalized terms.

As someone with much less enterprise experience, but some small business IT administration experience: how does Incus replace ESXi for virtual machines, coming from the “containerization is the new hotness but doesn’t work for me” angle?

You funny guy 😂😂

Now, I’m on my phone so I can’t write that much, but I’ll say that the post I linked to isn’t about potential issues; it goes over specific situations where it failed (ZFS, OVPN, etc.), but I obviously won’t provide anyone with crash logs and kernel panics.

About ESXi: Incus provides you with a CLI and web interface to create, manage and migrate VMs. It also provides basic clustering features. It isn’t as feature-complete as ESXi but it gets the job done for most people who just want a couple of VMs. At the end of the day it is more in line with what Proxmox offers than with what ESXi offers, BUT it’s effectively free, so it won’t withhold important updates from users running on free licenses.

If you list what you really need in terms of features I can point you to documentation or give my opinion on how they compare and what to expect.

The migration is bound to happen in the next few months, and I can’t recommend moving to incus yet since it’s not in stable/LTS repositories for Debian/Ubuntu, and I really don’t want to encourage adding third-party repositories to the mix - they are already widespread in the setup I inherited (new gig), and part of the major clusterfuck that is upgrade management (or the lack thereof). I really want to standardize on official distro repositories. On the other hand the current LXD packages are provided by snap (…) so that would still be an improvement, I guess.

Management is already sold on the idea of Proxmox (not by me), so I think I’ll take the path of least resistance. I’ve had mostly good experiences with it in the past, even if I found their custom kernels a bit strange to start with… do you have any links/info about the way in which Proxmox kernels/packages differ from Debian stable? I’d still like to put in a word of caution about that.

DO NOT migrate / upgrade anything to the snap package. That package is from Canonical and it postdates the Incus fork; this means that if you go for it you may never be able to migrate to Incus afterwards, and/or you’ll become a hostage of Canonical.

About the rest, if you don’t want to add repositories you should migrate into LXD LTS from Debian 12 repositories. That version is and will be compatible with Incus and both the Incus and Debian teams have said that multiple times and are working on a migration path. For instance the LXD from Debian will still be able to access the Incus image server while the Canonical one won’t.

DO NOT migrate / upgrade anything to the snap package

It was already in place when I came in (made me roll my eyes), and it’s a mess. As you said, there’s no proper upgrade path to anything else. So anyway…

you should migrate into LXD LTS from Debian 12 repositories

The LXD version in Debian 12 is buggy as fuck, this patch has not even been backported https://github.com/canonical/lxd/issues/11902 and 5.0.2-5 is still affected. It was a dealbreaker in my previous tests, and doesn’t inspire confidence in the bug testing and patching process on this particular package. On top of it, it will be hard to convince the other guys that we should ditch Ubuntu and their shenanigans, and that we should migrate to good old Debian (especially if the lxd package is in such a state). Some parts of the job are cool, but I’m starting to see there’s strong resistance to change, so as I said, path of least resistance.

Do you have any links/info about the way in which Proxmox kernels/packages differ from Debian stable?

So you say it is “buggy as fuck” because there’s a bug that makes it so you can’t easily run it if your locale is different than English? 😂 Anyways you can create the bridge yourself and get around that.

About the link, Proxmox kernel is based on Ubuntu, not Debian…

“buggy as fuck” because there’s a bug that makes it so you can’t easily run it if your locale is different than English?

It sends pretty bad signals when it causes a crash on the first `lxd init` (sure I could make the case that there are workarounds, switch locales, create the bridge, but it doesn’t help make it appear as a better solution than proxmox). Whatever you call it, it’s a bad looking bug, and the fact that it was not patched in debian stable or backports makes me think there might be further hacks needed down the road for other stupid bugs like this one, so for now, hard pass on the Debian package (might file a bug on the BTS later).

About the link, Proxmox kernel is based on Ubuntu, not Debian…

Thanks for the link mate, Proxmox kernels are based on Ubuntu’s, which are in turn based on Debian’s, not arguing about that - but I was specifically referring to this comment

having to wait months for fixes already available upstream or so they would fix their own shit

any example/link to bug reports for such fixes not being applied to proxmox kernels? Asking so I can raise an orange flag before it gets adopted without due consideration.

This is what I would recommend too - QEMU + libvirt with Sanoid for automatic snapshot management. Incus is a solid option too.

Also VirtualBox.

They’re obviously looking for a type 1 hypervisor like ESXi. A type 2 hypervisor like VirtualBox does not fit the bill.

What is the difference between type 1 & 2 please ?

Type 1 runs on bare metal. You install it directly onto server hardware. Type 2 is an application (not an OS) that lives inside an OS. Regardless of whether that OS is a guest or a host, the hypervisor is a guest of that platform, and the VMs inside it are guests of that hypervisor.

Thank you

The previous comment is an excellent summary. It is worth noting that there are some type 1 hypervisors that can look like type 2s. Specifically, KVM in Linux (which sometimes gets referred to as Virt-manager, Virtual Machine Manager, or VMM, after the program typically used to manage it) and Hyper-V in Windows.

These get mistaken for type 2 hypervisors because they run inside of your normal OS, rather than being a dedicated platform that you install in place of it. But the key here is that the hypervisor itself (that is, the software that actually runs the VM) is directly integrated into the underlying operating system. You were installing a hypervisor OS the whole time, you just didn’t realise it.

The reason this matters is that type 1 hypervisors can operate at the kernel level, meaning they can directly manage resources like your memory, CPU and graphics. Type 2 hypervisors have to queue with all the other pleb software to request access to these resources from the OS. This means that type 1 hypervisors will generally offer better performance.

With hypervisor platforms like Proxmox, ESXi, Hyper-V Server Core, or XCP-ng, what you get is a type 1 hypervisor with an absolutely minimal OS built around it. Basically, just enough software to do the job of running VMs, and nothing else. Like a drag racer.
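On a Linux box you can check whether the in-kernel KVM hypervisor described above is usable. The sketch below assumes an x86 machine: it looks for the `vmx` (Intel VT-x) or `svm` (AMD-V) CPU flags in `/proc/cpuinfo` and for the `/dev/kvm` device that the kvm kernel module exposes. The function names are made up for this example.

```python
import os


def cpu_has_virt_extensions(cpuinfo_text: str) -> bool:
    """True if any 'flags' line lists vmx (Intel VT-x) or svm (AMD-V)."""
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags"):
            # Lines look like "flags\t\t: fpu vme ... vmx ..."
            _, _, rest = line.partition(":")
            flags = rest.split()
            if "vmx" in flags or "svm" in flags:
                return True
    return False


def kvm_available(cpuinfo_path: str = "/proc/cpuinfo",
                  kvm_device: str = "/dev/kvm") -> bool:
    """True if the CPU advertises virtualization and /dev/kvm exists."""
    try:
        with open(cpuinfo_path) as fh:
            has_ext = cpu_has_virt_extensions(fh.read())
    except OSError:
        # No readable cpuinfo (e.g. not Linux): report KVM as unavailable.
        return False
    return has_ext and os.path.exists(kvm_device)


if __name__ == "__main__":
    print("KVM usable:", kvm_available())
```

If the flags are present but `/dev/kvm` is missing, virtualization is often disabled in the firmware settings or the kvm module is not loaded.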

VB is awful.

And I use it every day.

It’s like a first-try at a hypervisor. Terrible UI, with machine config scattered around. Some stuff can only be done on the command line after you search the web for how to do it (like basic stuff, say run headless by default). Enigmatic error messages.

Proxmox works well for me

This is the way

If you’re running mostly Linux VMs, Proxmox is really good. It’s based on KVM and has a really nice feature set.

Windows guests also run fine on KVM; use the VirtIO drivers from the Fedora project.

I’ve used Hyper-V and in fact moved away from ESXi long ago. VMWare had amazing features but we could not justify the ever-increasing costs. Hyper-V can do just about anything VMWare can do if you know Powershell.

Seconded for Hyper-V, and MUCH easier to patch the free edition than ESXi.

Another vote for Hyper-V. Moved to it from ESXi at home because I had to manage a LOT of Hyper-V hosted machines at work, so I figured I may as well get as much exposure to it as I could. Works fine for what I need.

I use it with WAC on my home server and it’s good enough for anything I need to do. Easy to create VMs using that UI, PS not even needed.

I’m pretty happy with XCP-ng with their XenOrchestra management interface. XenOrchestra has a free and enterprise version, but you can also compile it from source to get all the enterprise features. I’d recommend this script: https://github.com/ronivay/XenOrchestraInstallerUpdater

I’d say it’s a slightly more advanced ESXi with vCenter and less confusing UI than Proxmox.

I second this and would like to add in another resource for XCP-ng : Lawrence Systems on YouTube.

Qemu/virt manager. I’ve been using it and it’s so fast. I still need to get the clipboard sharing working but as of right now it’s the best hypervisor I’ve ever used.

I love it. Virtmanager connecting over ssh is so smooth.

I actually moved everything to docker containers at home… Not an apples to apples, but I don’t need so many full OSs it turns out.

At work we have a mix of things running right now to see. I don’t think we’ll land on ovirt or openstack. It seems like we’ll bite the cost bullet and move all the important services to amazon.

Acronyms, initialisms, abbreviations, contractions, and other phrases which expand to something larger, that I’ve seen in this thread:

| Fewer Letters | More Letters |
|---|---|
| DNS | Domain Name Service/System |
| ESXi | VMWare virtual machine hypervisor |
| HA | Home Assistant automation software ~ High Availability |
| LTS | Long Term Support software version |
| LXC | Linux Containers |
| ZFS | Solaris/Linux filesystem focusing on data integrity |
| k8s | Kubernetes container management package |

[Thread #540 for this sub, first seen 24th Feb 2024, 11:35]

I do not know; however, that logo is amazing

EDIT: Found it — https://sega-ai.neocities.org/

OOTL and someone who only uses a vm once every several years for shits & grins: What happened to vmware?

As part of the transition of perpetual licensing to new subscription offerings, the VMware vSphere Hypervisor (Free Edition) has been marked as EOGA (End of General Availability). At this time, there is not an equivalent replacement product available.

For further details regarding the affected products and this change, we encourage you to review the following blog post: https://blogs.vmware.com/cloud-foundation/2024/01/22/vmware-end-of-availability-of-perpetual-licensing-and-saas-services/

Whelp…boo-urns. :(

I know everyone says to use Proxmox, but it’s worth considering xcp-ng as well.

In my “testing” at work and in private, PVE is miles ahead of xcp-ng in terms of performance. Sure, xcp-ng does its thing very stably, but everything else… Proxmox is faster.

I agree that Proxmox VE is better; I’m just saying that people should compare multiple options and pick the one they like the best.

I’m using Unraid on my home server because it can run Docker containers in addition to KVM and LXC (via a plugin).

If you are dipping toes into containers with kvm and proxmox already, then perhaps you could jump into the deep end and look at kubernetes (k8s).

Even though you say you don’t need production quality, it actually does a lot for you, and you just need to learn a single API framework which has really great documentation.

Personally, if I am choosing a new service to host, one of my first metrics in that decision is how well it is documented.

You could also go the simple route and use docker to make containers. However making your own containers is optional as most services have pre built ones that you can use.

You could even use auto scaling to run your cluster with just 1 node if you don’t need it to be highly available with a lot of 9s in uptime.
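To put “a lot of 9s” into numbers, here is a small back-of-the-envelope calculation of how much downtime per year each availability level allows. It assumes a 365-day year and is only meant to show the arithmetic.

```python
HOURS_PER_YEAR = 365 * 24  # 8760, ignoring leap years


def downtime_hours_per_year(nines: int) -> float:
    """Allowed downtime per year for an availability of N nines (2 -> 99%)."""
    unavailability = 10.0 ** -nines  # e.g. 3 nines -> 0.001
    return HOURS_PER_YEAR * unavailability


if __name__ == "__main__":
    for n in range(1, 6):
        availability = 100 * (1 - 10.0 ** -n)
        print(f"{availability:.3f}% -> {downtime_hours_per_year(n):.2f} h/year")
```

Three nines (99.9%) works out to roughly 8.76 hours of downtime per year, which a single-node home cluster can plausibly live with; each extra nine cuts that budget by a factor of ten.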

The trickiest thing with K8s is the networking, certs and DNS but there are services you can host to take care of that for you. I use istio for networking, cert-manager for certs and external-dns for DNS.

I would recommend trying out k8s first on a cloud provider like DigitalOcean or Linode. Managing your own k8s control plane on bare metal has its own complications.

There are also full suites like Rancher which will abstract away a lot of the complexity.

K8s is great, but you’re changing the subject and not answering OP’s question. Containers =/= VMs.

You are right. But proxmox and many of the other suggestions aren’t vms either.

For home have a crack at KVM with front ends like proxmox or canonical lxd manager.

In an enterprise environment take a look at Hyper-V, or if you think you need hyperconverged look at Nutanix.

Coming from a decade of VMware ESXi and then a few years as a certified Nutanix reseller, I was almost instantly at home clustering Proxmox, then added Ceph across my hosts and went ‘wtf did I sell Nutanix for’. I was already running FreeNAS (later TrueNAS) by then, so I was already converted to hosting on Linux, but seriously, I was impressed.

Business case: with what you save on licensing for Nutanix or vSAN, you can go all NVMe SSD and run Ceph.